Customers sometimes ask us: where are my servers? What does the datacentre look like? How do you keep my sites up and running 100% of the time? The nitty-gritty of managed hosting infrastructure is often overlooked, with the finer details abstracted away from developers and wider digital teams, but it’s always reassuring to know that with our fully managed hosting, every angle is covered and your sites are running on a reliable, well-architected platform.

In normal times, we would offer customers a guided tour of the datacentre so they could see it in all its glory, and many people are fascinated by how far we go to keep sites online 24/7. As we can’t do this at the moment, we thought we’d put some of our tour material into writing.

Architecture

It’s no secret that computers are unreliable! Hardware breaks, software behaves unexpectedly, and servers crash. So when scouting out a facility for our managed hosting platform, we needed to keep this inevitable fact in mind and make sure we had redundancy in place at every point.

This doesn’t just mean servers, but power systems, cooling and network connectivity: the lot. Every one of them had to have enough backup in place that if a single component (or even multiple components) failed, things would keep running, uninterrupted.

Datacentres are classified into tiers, with each tier defining the level of redundancy on offer. Both of our UK facilities are Tier 3, which means they have what’s called N+1 redundancy: every critical component has at least one backup ready to take over. Tier 3 is rated for 99.982% availability, which allows for just 1.6 hours of downtime per year!
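To show where the 1.6-hour figure comes from, here’s a minimal Python sketch that converts an availability percentage into the downtime it permits per year. It assumes a 365-day (8,760-hour) year, and the function name is our own, chosen for illustration.

```python
# Convert an availability percentage into the downtime it permits per year.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the maximum hours of downtime per year allowed by an availability figure."""
    unavailable_fraction = 1 - availability_percent / 100
    return HOURS_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    hours = annual_downtime_hours(99.982)
    print(f"{hours:.2f} hours (~{hours * 60:.0f} minutes) per year")
```

For 99.982% this prints 1.58 hours (~95 minutes) per year, which rounds to the 1.6 hours quoted for Tier 3.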

Dual Everything

It’s common these days for servers and network equipment to come with two power supplies (and sometimes more!). This means that if one unit dies, the server keeps running on the other.

So what happens if there’s a power cut?

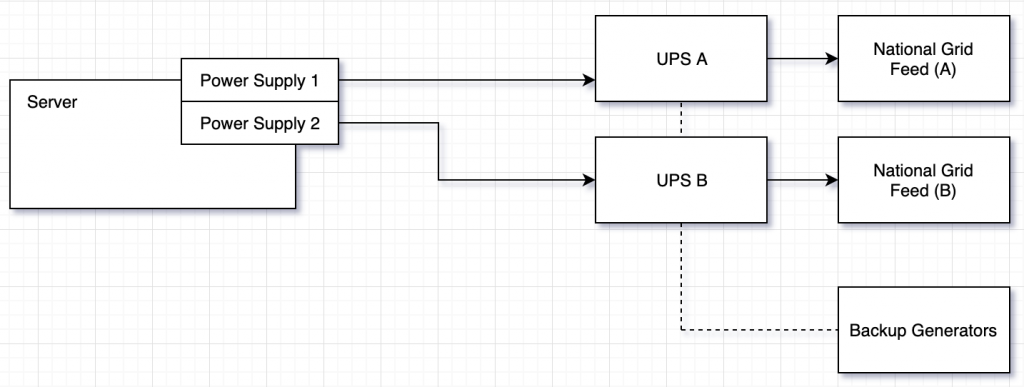

To provide true fault tolerance, we have two of everything: two power supplies, fed from two Power Distribution Units (PDUs), backed by two sets of backup batteries, powered by two independent electricity feeds. The two power paths are entirely isolated from each other, which ensures that one going down can’t impact the other. The datacentre also has diesel generators with enough fuel on site to run for two continuous weeks, so in the worst-case scenario we can operate “off-grid” for some time.
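Why does doubling up help so much? A rough way to see it is to treat each power path as a chain of parts that must all work (in series), and the A and B paths as alternatives where only one needs to work (in parallel). The Python sketch below uses made-up availability figures purely for illustration, and assumes the two paths fail independently, which is what keeping them isolated is meant to approximate.

```python
from math import prod


def series_availability(*parts: float) -> float:
    """A chain where every part must work, e.g. PSU -> PDU -> UPS -> feed."""
    return prod(parts)


def parallel_availability(*paths: float) -> float:
    """Redundant paths where any one is enough: the system is only down when
    every path is down at once (assumes independent failures)."""
    return 1 - prod(1 - p for p in paths)


if __name__ == "__main__":
    # Hypothetical per-component availabilities, for illustration only.
    single_path = series_availability(0.9999, 0.9999, 0.999, 0.999)
    dual_path = parallel_availability(single_path, single_path)
    print(f"one power path: {single_path:.6f}")
    print(f"A + B paths:    {dual_path:.6f}")
```

The single path is only as good as its weakest links combined, while the A + B pair comes out far closer to 100%. The catch is the independence assumption: a shared fault that takes out both paths at once would undo the gain, which is why isolation matters as much as duplication.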

In the event of a power outage on one of these systems, the UPS (uninterruptible power supply; think of it as a large battery system that the servers are connected to) supplies emergency power while the generators spin up. This ensures that a power disruption doesn’t take your servers down. Here’s a simple diagram:

Dual Cooling

As you might imagine, large racks full of IT equipment generate substantial heat. To dissipate it and keep servers cool, we use a large HVAC system. As with our power systems, the rule of two applies here, and there are two separate systems in operation. Loss of cooling can rapidly lead to equipment shutdowns and even damage, so it’s important for us to have redundancy in place to protect our servers. Having two systems also allows maintenance and testing to take place; for safety, only one unit is worked on at any given time.
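The maintenance point is really a capacity question: with any one cooling unit out of service, the units that remain must still be able to remove the full heat load. Here’s a small Python sketch of that N+1 check; the kilowatt figures are hypothetical, not the ratings of our actual plant.

```python
from typing import Sequence


def survives_one_unit_offline(unit_capacities_kw: Sequence[float], heat_load_kw: float) -> bool:
    """N+1 check: take each unit offline in turn (failure or maintenance) and
    confirm the remaining units can still remove the full heat load."""
    total_kw = sum(unit_capacities_kw)
    return all(total_kw - unit_kw >= heat_load_kw for unit_kw in unit_capacities_kw)


if __name__ == "__main__":
    # Hypothetical figures: two 500 kW cooling systems.
    print(survives_one_unit_offline([500, 500], heat_load_kw=400))  # True
    print(survives_one_unit_offline([500, 500], heat_load_kw=600))  # False
```

In the second case the two units together could handle the load, but not while one of them is down, so that setup would not be truly redundant.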

Dual Networking

The last critical component in our trio, and one of the most important, is internet connectivity. Again, we use the “A + B” principle to ensure redundancy at all times. The facility operates two comms rooms (north and south), so that in the event of a fibre cut (yes, it happens…), your sites stay online.

From the comms rooms, where we physically connect to our upstream providers, two core routers connect to (you guessed it) two core switches. From this aggregation layer we connect to customer racks, where the servers plug into our network. And because we have a healthy sense of paranoia about hardware failure, we keep spare equipment on site, just in case.
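One way to reason about the “A + B” layout is to ask: is there any single device whose failure would cut a customer rack off from the internet? The Python sketch below models a simplified version of the path from comms rooms to racks as a graph and checks each device in turn. The device names and exact links are hypothetical; a real network has many more devices and links than this.

```python
from collections import deque

# Hypothetical, simplified A + B topology: names and links are illustrative only.
LINKS = [
    ("internet", "upstream_a"), ("internet", "upstream_b"),
    ("upstream_a", "comms_north"), ("upstream_b", "comms_south"),
    ("comms_north", "core_router_1"), ("comms_south", "core_router_2"),
    ("core_router_1", "core_switch_1"), ("core_router_1", "core_switch_2"),
    ("core_router_2", "core_switch_1"), ("core_router_2", "core_switch_2"),
    ("core_switch_1", "customer_rack"), ("core_switch_2", "customer_rack"),
]


def build_graph(links):
    """Turn a list of links into an undirected adjacency map."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, start, goal, failed):
    """Breadth-first search from start to goal, treating `failed` as removed."""
    if failed in (start, goal):
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbour in graph[node]:
            if neighbour != failed and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return False


def single_points_of_failure(graph, start, goal):
    """Devices whose individual failure disconnects start from goal."""
    return sorted(
        device for device in graph
        if device not in (start, goal) and not reachable(graph, start, goal, device)
    )


if __name__ == "__main__":
    graph = build_graph(LINKS)
    print(single_points_of_failure(graph, "internet", "customer_rack"))  # []
```

With both halves of every pair in place, no single device is fatal. Drop the `core_switch_2` links and the same check reports `core_switch_1` as a single point of failure, which is exactly the situation the dual design avoids.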

As well as redundancy at the hardware level, we also maintain multi-homed routing to the wider internet, to guard against problems with upstream transit providers. We keep a close eye on network latency and availability so that we can route around any potential issues.
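In a real network, this kind of decision is typically made by the routing protocol (usually BGP) on the edge routers rather than by a script, but the basic idea of routing around problems can be sketched simply: ignore any upstream that is failing health checks, then prefer the one with the lowest measured latency. The provider names, fields and measurements below are hypothetical, for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Upstream:
    name: str          # hypothetical transit provider label
    healthy: bool      # did the latest availability probe succeed?
    latency_ms: float  # latest measured round-trip time


def preferred_upstream(upstreams: List[Upstream]) -> Optional[Upstream]:
    """Skip unhealthy upstreams, then pick the lowest-latency one that remains."""
    healthy = [u for u in upstreams if u.healthy]
    if not healthy:
        return None  # nothing usable: time to raise an alert
    return min(healthy, key=lambda u: u.latency_ms)


if __name__ == "__main__":
    providers = [
        Upstream("transit_a", healthy=True, latency_ms=12.5),
        Upstream("transit_b", healthy=False, latency_ms=8.0),  # failing probes
        Upstream("transit_c", healthy=True, latency_ms=15.1),
    ]
    choice = preferred_upstream(providers)
    print(choice.name if choice else "no healthy upstream")  # transit_a
```

Note that `transit_b` has the best latency but is skipped because it is failing its probes: availability comes first, speed second.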

Every component in our infrastructure is rigorously monitored and tested, including the UPS systems, which undergo monthly on-load tests to verify that they work as expected.

Fire Suppression

One of the lesser-talked-about features of a datacentre is fire detection and suppression. To guard against the risk of fire, we use VESDA (Very Early Smoke Detection Apparatus) and FM200 fire suppression gas. FM200 puts out fires quickly by absorbing heat, without damaging electrical equipment or harming people who might be working on the datacentre floor.

Security

Back in the before times, when we invited customers to visit our datacentre for a good look around, one of the first things people noticed was how seriously we take security. We’re well equipped here, with:

- Perimeter fencing and anti-ram raid barriers

- Blastproof windows and steel security doors

- Interlocked man-trap doors (we’ve had more than one visitor get stuck in these!)

- Iris recognition for biometric access

- 24/7/365 on-site security patrols

These measures help keep your data secure from physical intrusion.

Staffing

Our datacentre is staffed around the clock (24/7/365) by both security and trained technical staff. This means we can react quickly to any issue, such as replacing a failed SSD or provisioning new equipment, no matter the time of day. And as with all other areas of the business, we have escalation paths in place so that additional members of the team can come on site if needed.

Thanks for reading! We’re always happy to dive even deeper if you have follow-up questions or comments; just drop us a note via our website. And of course, if you’d like to see things for yourself, we’d love to invite you back on site as soon as things get back to normal!